Abstract

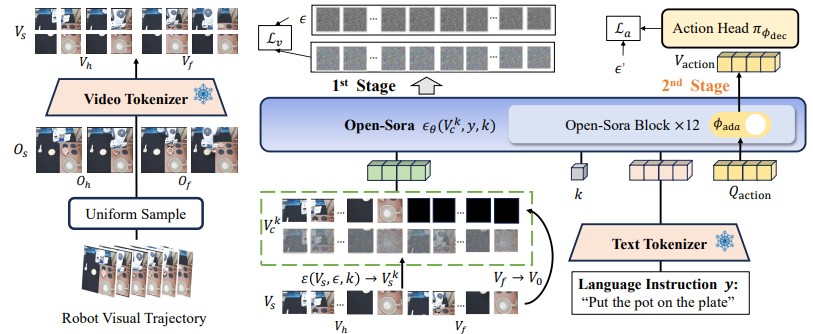

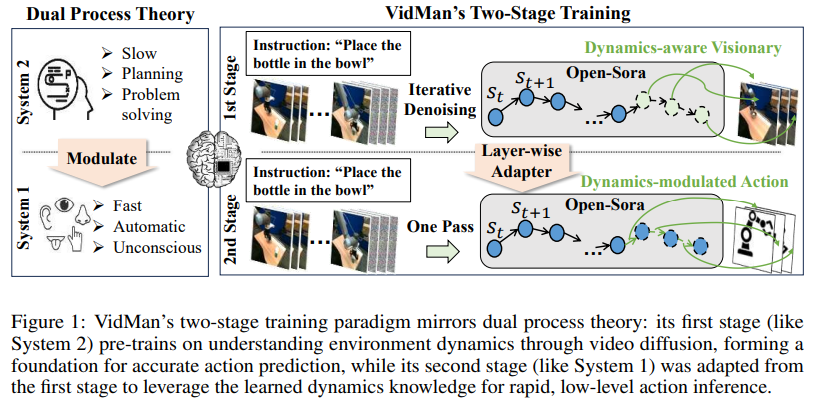

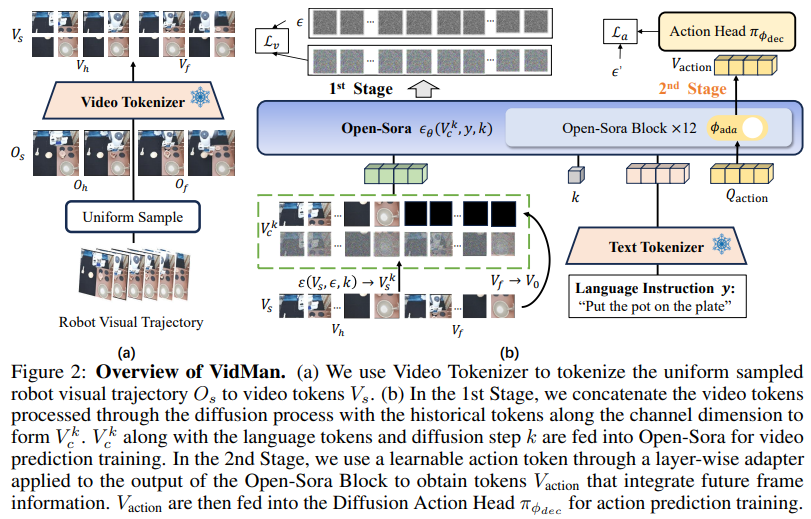

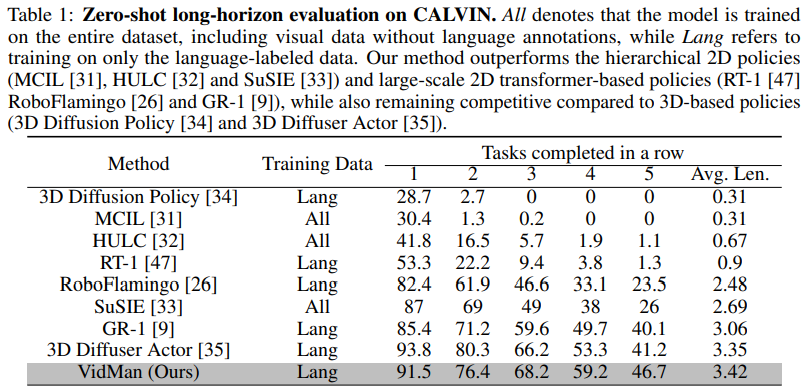

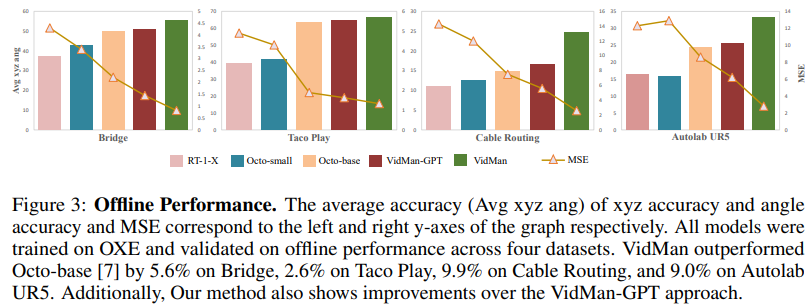

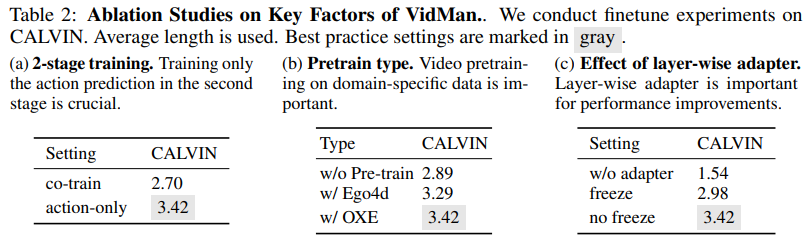

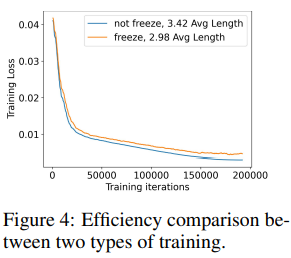

Recent advances in learning video generation models from large-scale video data demonstrate significant potential for understanding complex physical dynamics. This suggests that diverse robot trajectory data could be leveraged to develop a unified, dynamics-aware model that enhances robot manipulation. However, given the relatively small amount of available robot data, directly fitting the data without considering the relationship between visual observations and actions could lead to suboptimal data utilization. To this end, we propose VidMan (Video Diffusion for Robot Manipulation), a novel framework that employs a two-stage training mechanism inspired by dual-process theory from neuroscience to enhance stability and improve data utilization efficiency. Specifically, in the first stage, VidMan is pre-trained on the Open X-Embodiment dataset (OXE) to predict future visual trajectories in a video denoising diffusion manner, enabling the model to develop a long-horizon awareness of the environment's dynamics. In the second stage, a flexible yet effective layer-wise self-attention adapter is introduced to transform VidMan into an efficient inverse dynamics model that predicts actions modulated by the implicit dynamics knowledge via parameter sharing. Our VidMan framework outperforms the state-of-the-art baseline model GR-1 on the CALVIN benchmark, achieving an 11.7% relative improvement, and demonstrates precision gains of over 9% on the OXE small-scale dataset. These results provide compelling evidence that world models can significantly enhance the precision of robot action prediction.

Framework

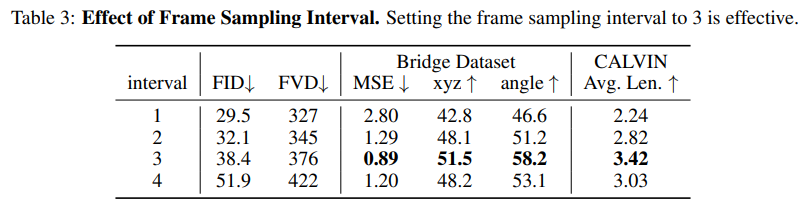
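The two-stage scheme summarized in the abstract can be illustrated with a toy sketch. This is not the VidMan implementation: `ToyBackbone`, `ToyActionAdapter`, the linear layers, the flattened frame vectors, and the 7-dimensional action are all hypothetical stand-ins chosen for brevity. The sketch only shows the shape of the two objectives: a denoising loss on future frames in stage one, and an action-regression loss in stage two that reuses the stage-one parameters through an adapter.

```python
import numpy as np

rng = np.random.default_rng(0)


def add_noise(x0, alpha_bar, noise):
    """Forward diffusion: blend clean frames with Gaussian noise."""
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise


class ToyBackbone:
    """Hypothetical stand-in for the video diffusion network (one linear layer)."""

    def __init__(self, dim):
        self.W = rng.normal(scale=0.1, size=(dim, dim))

    def features(self, x):
        # Shared representation used by both stages.
        return np.tanh(x @ self.W)

    def predict_noise(self, x_noisy):
        # Stage 1 head: estimate the noise that was added to the frames.
        return self.features(x_noisy)


class ToyActionAdapter:
    """Hypothetical stand-in for the stage-2 adapter that outputs actions."""

    def __init__(self, dim, action_dim):
        self.A = rng.normal(scale=0.1, size=(dim, action_dim))

    def predict_action(self, backbone, obs):
        # Reuses the backbone's parameters (parameter sharing) and maps
        # its features to an action vector.
        return backbone.features(obs) @ self.A


def stage1_loss(backbone, future_frames, alpha_bar):
    """Denoising objective: mean squared error between true and predicted noise."""
    noise = rng.normal(size=future_frames.shape)
    noisy = add_noise(future_frames, alpha_bar, noise)
    return float(np.mean((backbone.predict_noise(noisy) - noise) ** 2))


def stage2_loss(backbone, adapter, obs, expert_actions):
    """Action objective: mean squared error against demonstrated actions."""
    pred = adapter.predict_action(backbone, obs)
    return float(np.mean((pred - expert_actions) ** 2))


# Toy data: 4 samples, frames flattened to 8 values, 7-dimensional actions.
frames = rng.normal(size=(4, 8))
actions = rng.normal(size=(4, 7))
backbone = ToyBackbone(dim=8)
adapter = ToyActionAdapter(dim=8, action_dim=7)
loss_video = stage1_loss(backbone, frames, alpha_bar=0.5)
loss_action = stage2_loss(backbone, adapter, frames, actions)
```

In a real system each loss would drive gradient updates in its own training stage; the sketch evaluates each loss once to keep the structure visible.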

Experiment

Conclusion

In this paper, we propose VidMan, a novel framework that uses video diffusion models for robot imitation learning and addresses the limitations of current GPT-style paradigms in real-time applications. By combining a Dynamics-aware Visionary Stage, which develops a deep understanding of environment dynamics through pre-training on the Open X-Embodiment dataset, with a Dynamics-modulated Action Stage that efficiently integrates this knowledge into action prediction, VidMan achieves both high precision and computational efficiency. This two-stage approach ensures robust and rapid action generation, significantly improving performance on benchmarks such as CALVIN and the OXE dataset. In future work, we will extend VidMan to perceive more dimensions of information.

Acknowledgement

This work was supported in part by the National Science and Technology Major Project (2020AAA0109704), National Science Foundation of China Grant No. 62476293, the National Key Research and Development Program (Grant 2022YFE0112500), the National Natural Science Foundation of China (Grant 62101607), the Guangdong Outstanding Youth Fund (Grant No. 2021B1515020061), the Shenzhen Science and Technology Program (Grant No. GJHZ20220913142600001), the Nansha Key R&D Program under Grant No. 2022ZD014, and the Major Key Project of PCL (No. PCL2024A04, No. PCL2023AS203).