Abstract

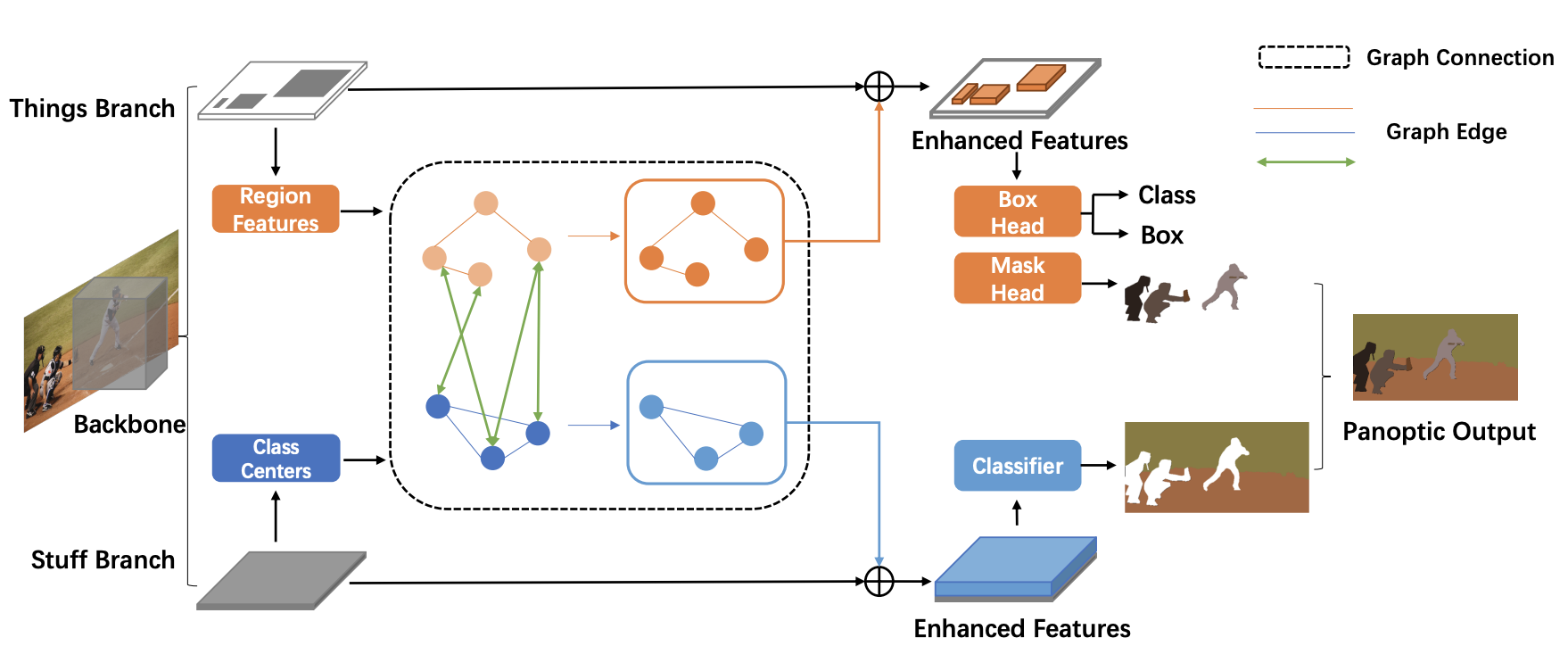

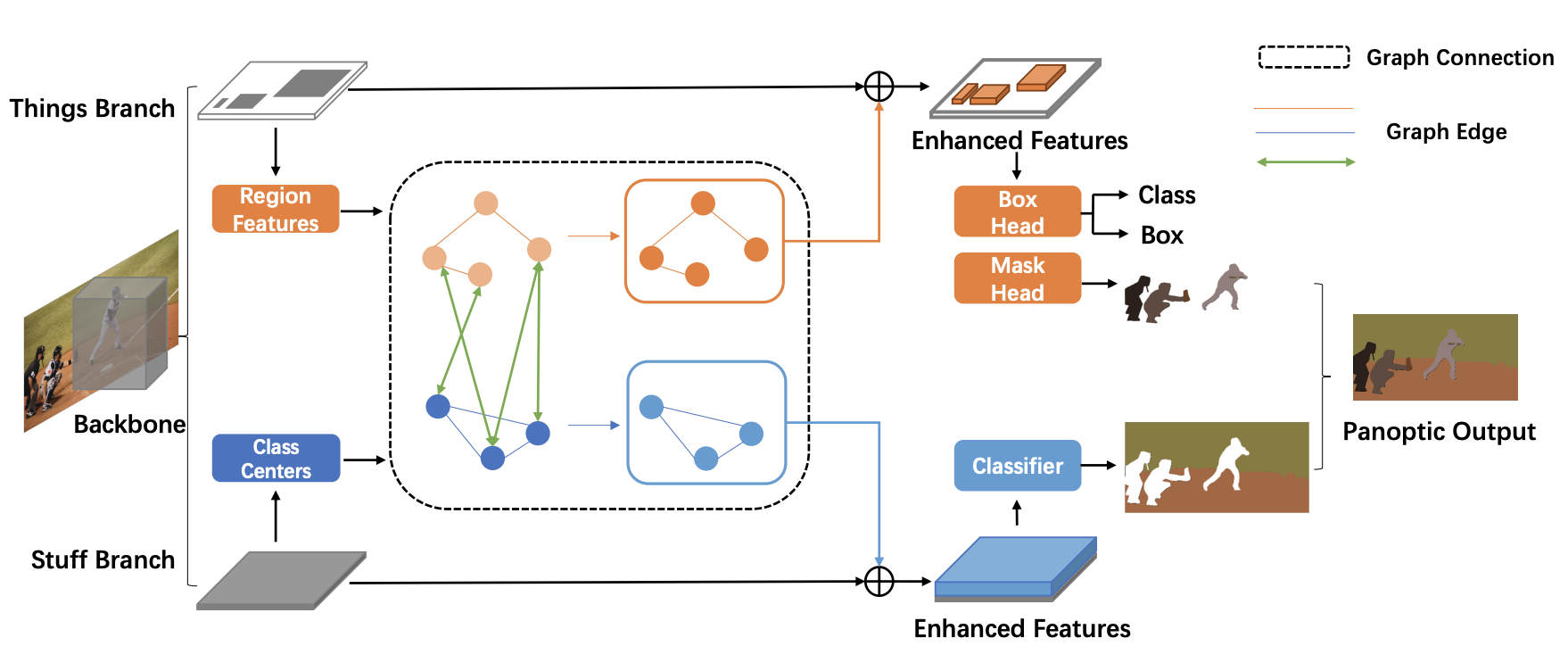

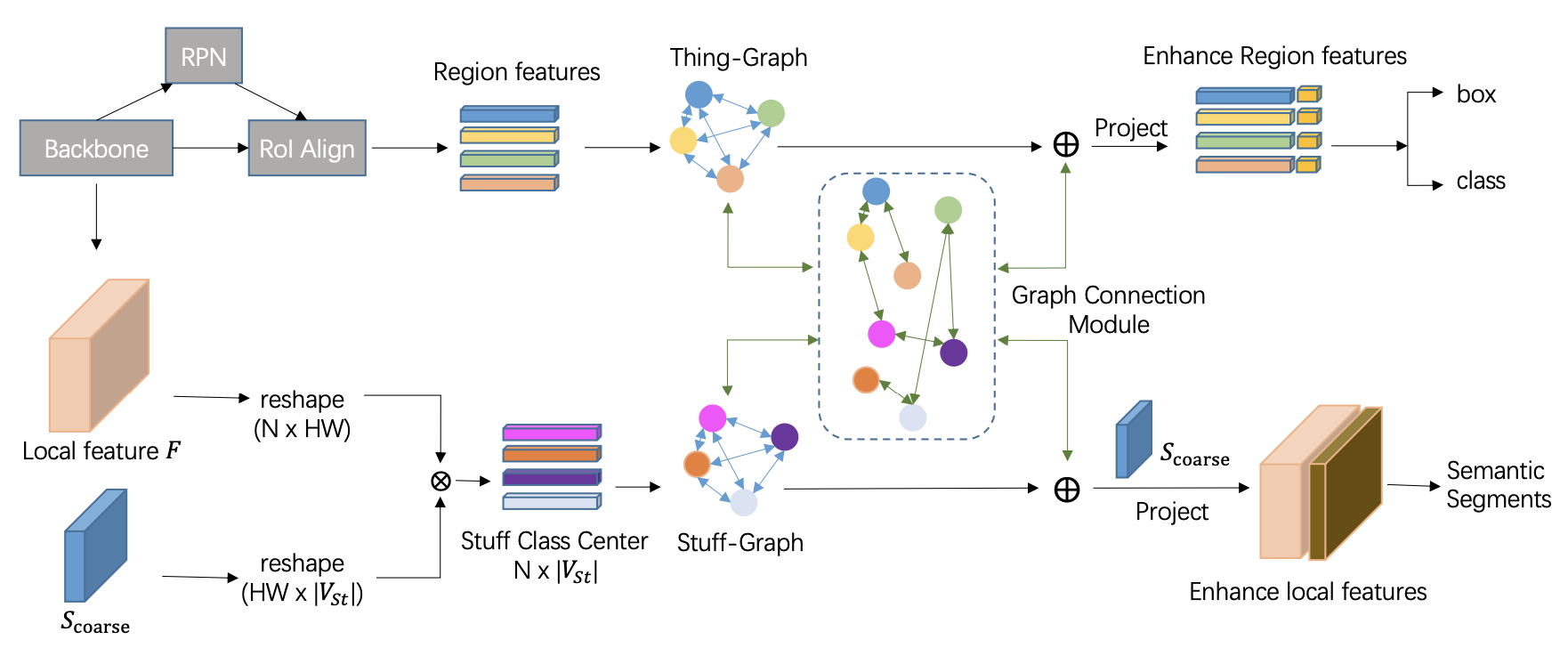

Recent research on panoptic segmentation resorts to a single end-to-end network that combines the tasks of instance segmentation and semantic segmentation. However, prior models either unify the two related tasks only at the architectural level via a multi-branch scheme, or reveal the underlying correlation between them through unidirectional feature fusion, disregarding the explicit semantic and co-occurrence relations among objects and background. Inspired by the fact that context information is critical for recognizing and localizing objects, and that comprehensive object details are important for parsing the background scene, we investigate explicitly modeling the correlations between objects and background to achieve a holistic understanding of an image in the panoptic segmentation task. We introduce a Bidirectional Graph Reasoning Network (BGRNet), which incorporates graph structure into the conventional panoptic segmentation network to mine the intra-modular and inter-modular relations within and between foreground things and background stuff classes. In particular, BGRNet first constructs image-specific graphs in both the instance and semantic segmentation branches, which enable flexible reasoning at the proposal level and the class level, respectively. To establish correlations between the separate branches and fully leverage the complementary relations between things and stuff, we propose a Bidirectional Graph Connection Module that diffuses information across branches in a learnable fashion. Experimental results demonstrate the superiority of BGRNet, which achieves new state-of-the-art performance on the challenging COCO and ADE20K panoptic segmentation benchmarks.

Framework

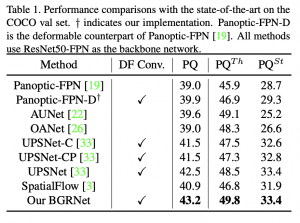

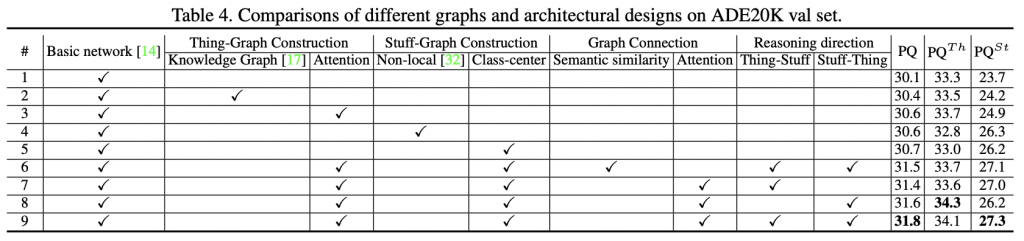

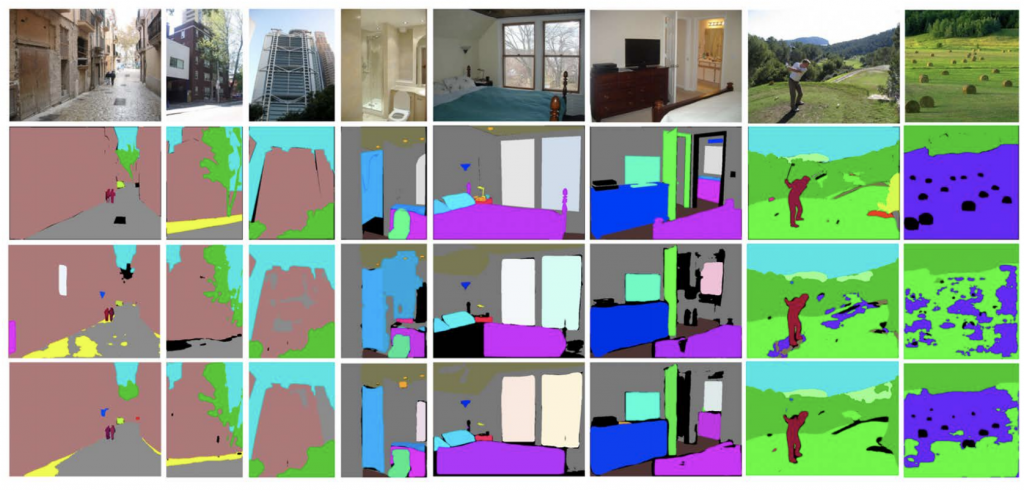
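
The bidirectional information flow between the two branches can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: it assumes thing nodes are proposal-level feature vectors, stuff nodes are class-level feature vectors (class centers), and that messages are weighted by scaled dot-product attention with a residual update. The function names (`graph_connection`, `bidirectional_step`), the weight matrices, and the feature sizes are all hypothetical and chosen only for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def graph_connection(src, dst, w_msg):
    """Pass messages from src nodes to dst nodes (one direction).

    Assumption: edge weights come from scaled dot-product similarity
    between node features; the paper's exact formulation may differ.
    """
    scores = dst @ src.T / np.sqrt(src.shape[1])  # (n_dst, n_src) affinities
    attn = softmax(scores, axis=1)                # each dst row sums to 1
    messages = attn @ (src @ w_msg)               # (n_dst, d) aggregated messages
    return dst + np.maximum(messages, 0.0)        # residual update with ReLU


def bidirectional_step(things, stuff, w_t2s, w_s2t):
    """Exchange information in both directions between the two graphs."""
    new_stuff = graph_connection(things, stuff, w_t2s)   # things -> stuff
    new_things = graph_connection(stuff, things, w_s2t)  # stuff -> things
    return new_things, new_stuff


# Toy example: 5 proposal nodes, 3 stuff-class nodes, 8-dim features.
rng = np.random.default_rng(0)
d = 8
things = rng.standard_normal((5, d))
stuff = rng.standard_normal((3, d))
w_t2s = rng.standard_normal((d, d)) * 0.1  # hypothetical learnable weights
w_s2t = rng.standard_normal((d, d)) * 0.1

new_things, new_stuff = bidirectional_step(things, stuff, w_t2s, w_s2t)
print(new_things.shape, new_stuff.shape)
```

Both updates read from the original node features, so neither direction sees the other's output within the same step. In a trained network the weight matrices would be learned end to end rather than sampled at random as they are here.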

Experiment

Conclusion

This paper introduces a Bidirectional Graph Reasoning Network (BGRNet) for panoptic segmentation that simultaneously segments foreground objects at the instance level and parses background contents at the class level. We propose a Bidirectional Graph Connection Module to propagate the information encoded in the semantic and co-occurrence relations between things and stuff, guided by the appearances of the objects and the extracted class centers in an image. Extensive experiments demonstrate the superiority of BGRNet, which achieves new state-of-the-art performance on two large-scale benchmarks, COCO and ADE20K.