Abstract

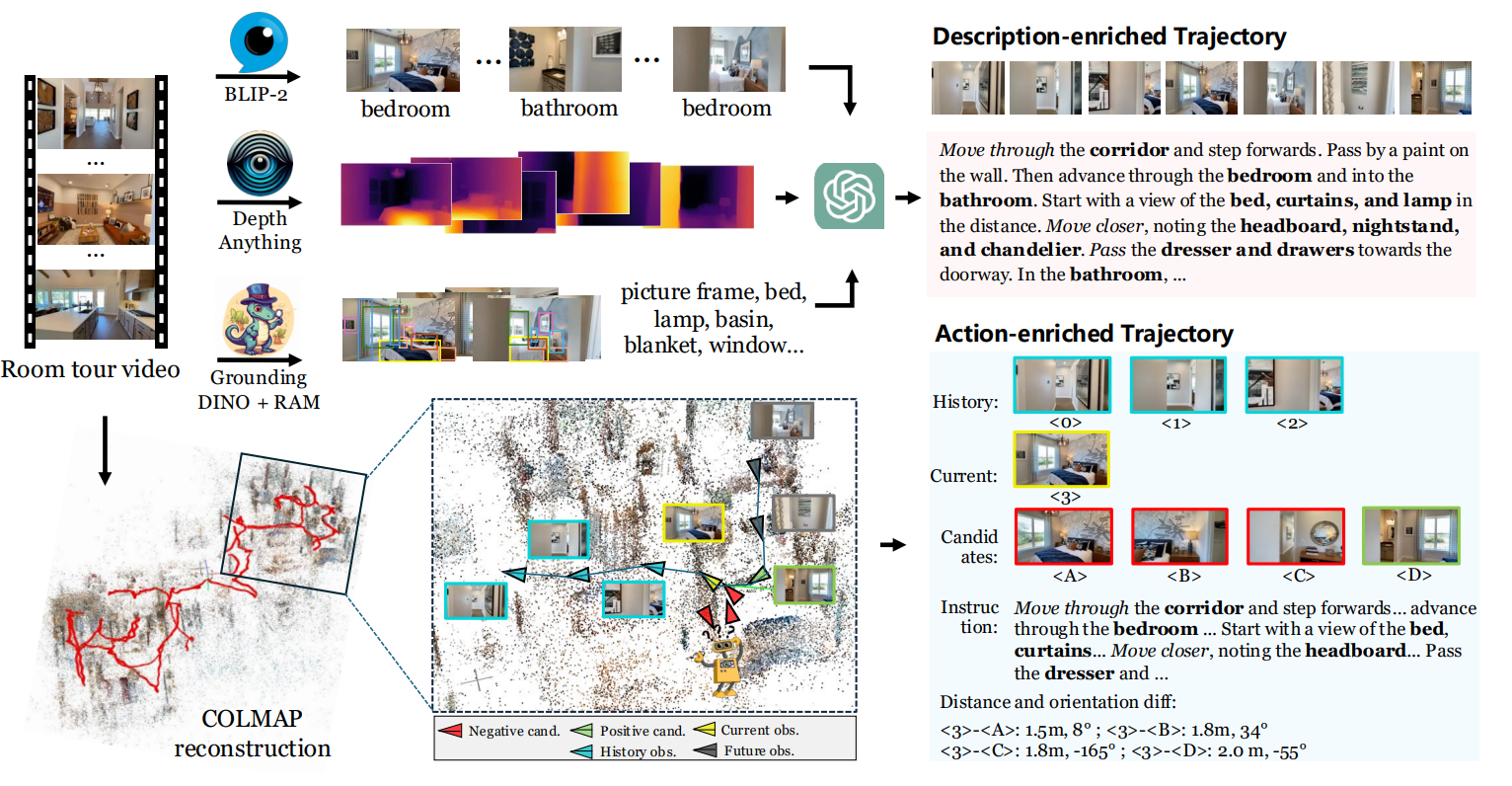

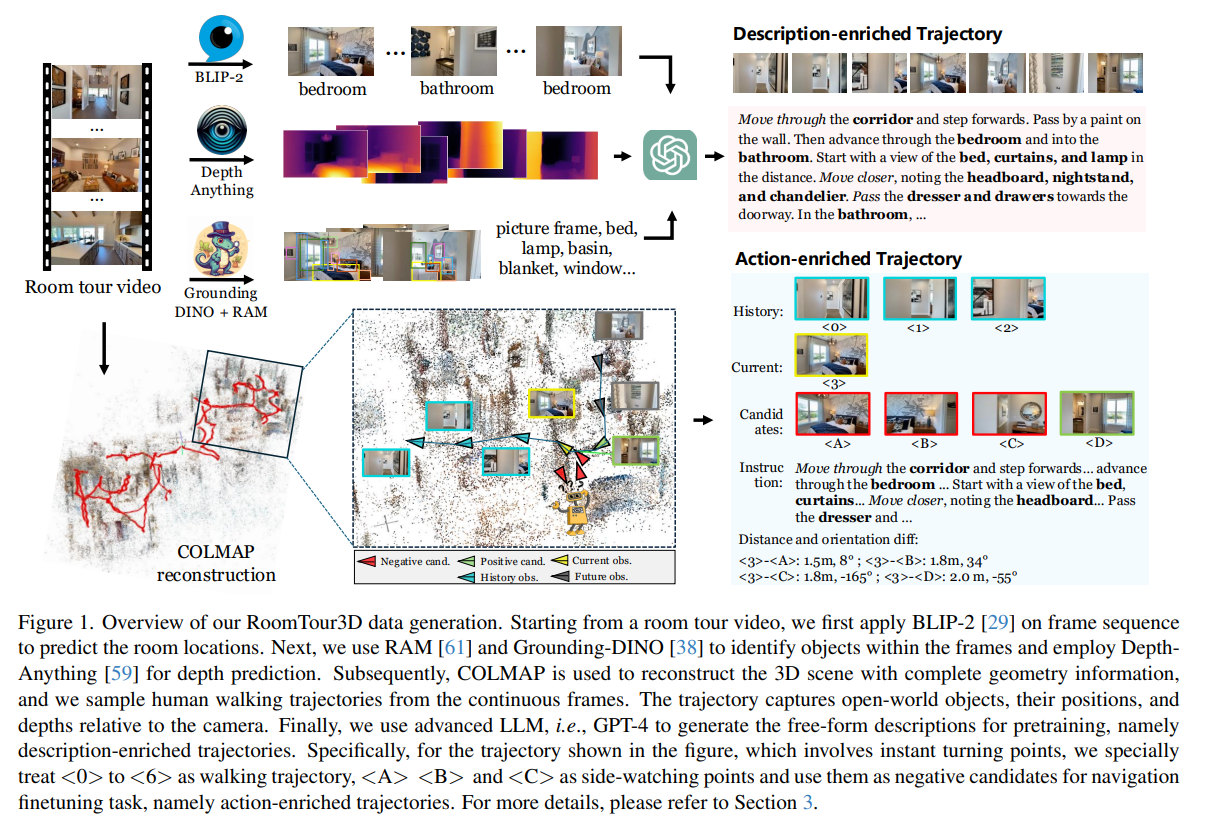

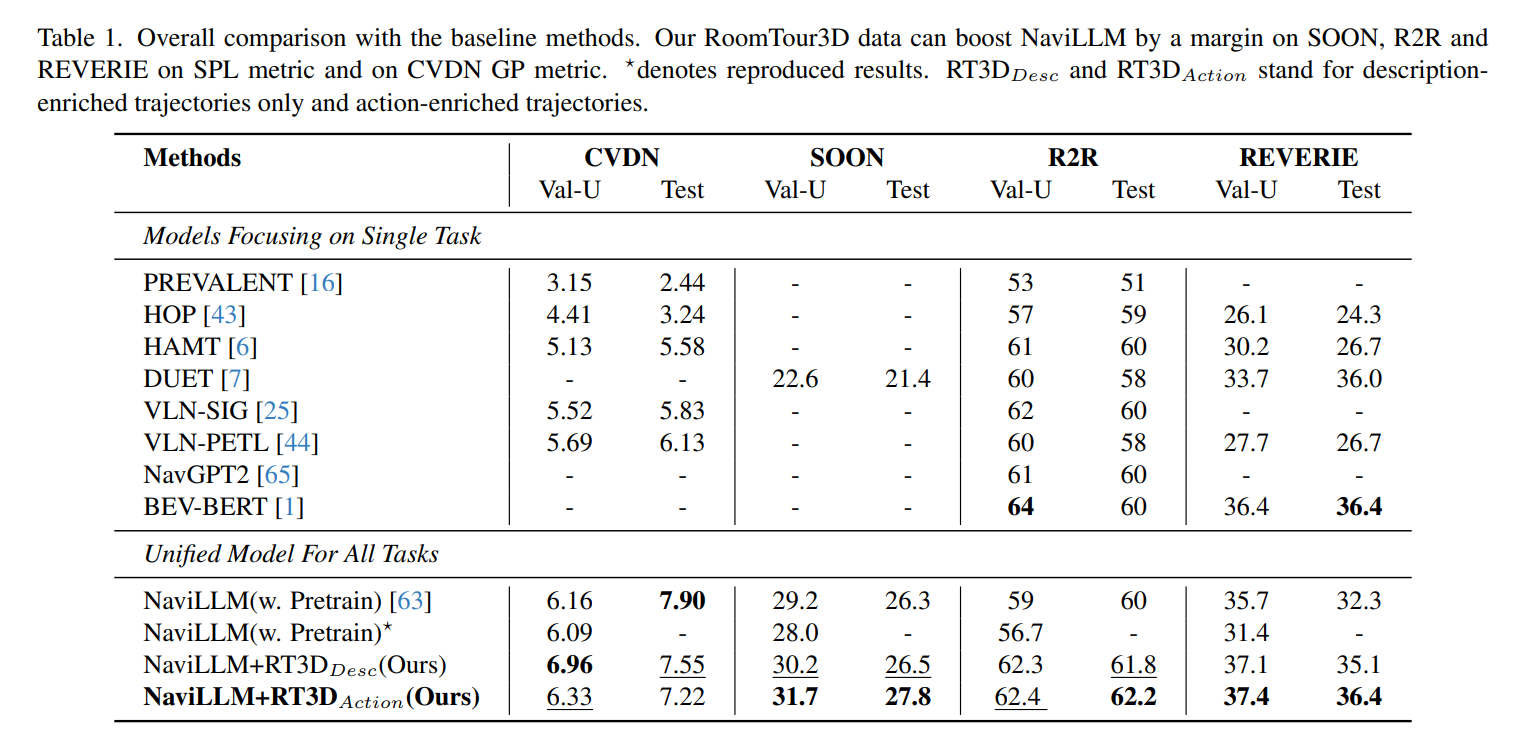

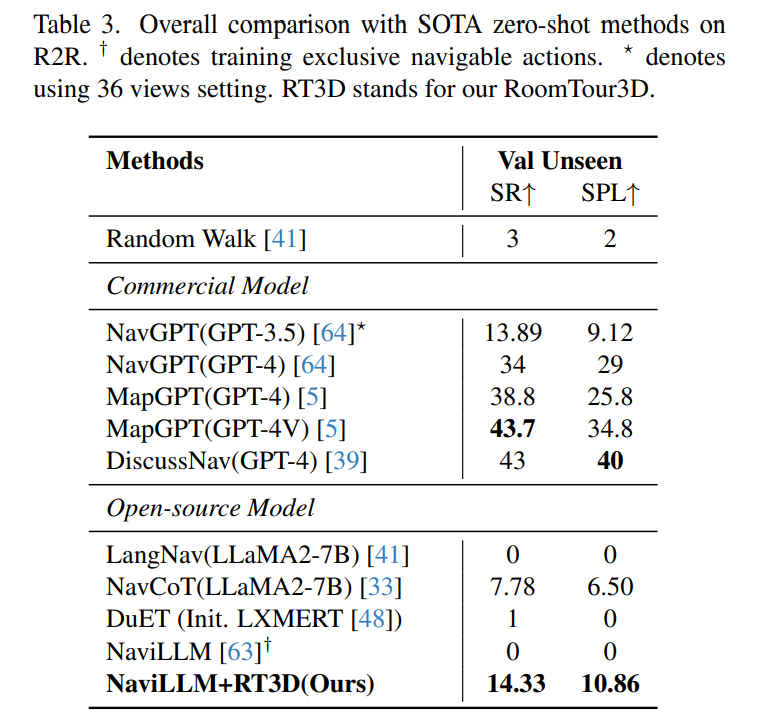

Vision-and-Language Navigation (VLN) suffers from the limited diversity and scale of training data, primarily constrained by the manual curation of existing simulators. To address this, we introduce RoomTour3D, a video-instruction dataset derived from web-based room tour videos that capture real-world indoor spaces and human walking demonstrations. Unlike existing VLN datasets, RoomTour3D leverages the scale and diversity of online videos to generate open-ended human walking trajectories and open-world navigable instructions. To compensate for the lack of navigation data in online videos, we perform 3D reconstruction and obtain 3D trajectories of walking paths, augmented with additional information on the room types, object locations, and 3D shape of surrounding scenes. Our dataset includes ~100K open-ended description-enriched trajectories with ~200K instructions, and 17K action-enriched trajectories from 1847 room tour environments. We demonstrate experimentally that RoomTour3D enables significant improvements across multiple VLN tasks, including CVDN, SOON, R2R, and REVERIE. Moreover, RoomTour3D facilitates the development of trainable zero-shot VLN agents, showcasing the potential and challenges of advancing towards open-world navigation.

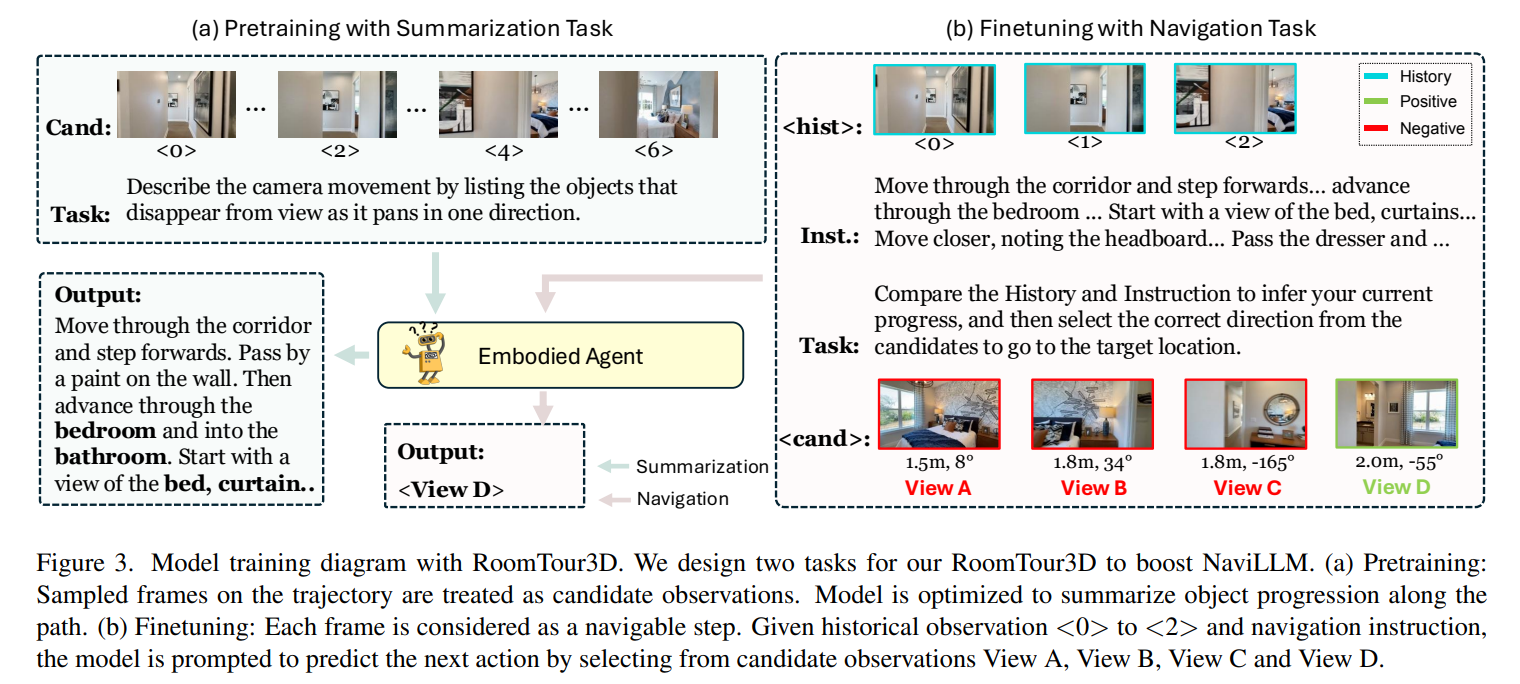

Framework

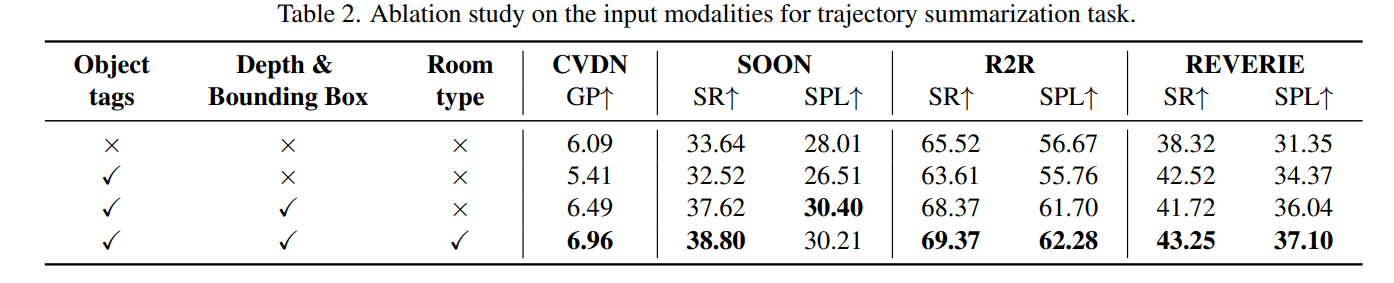

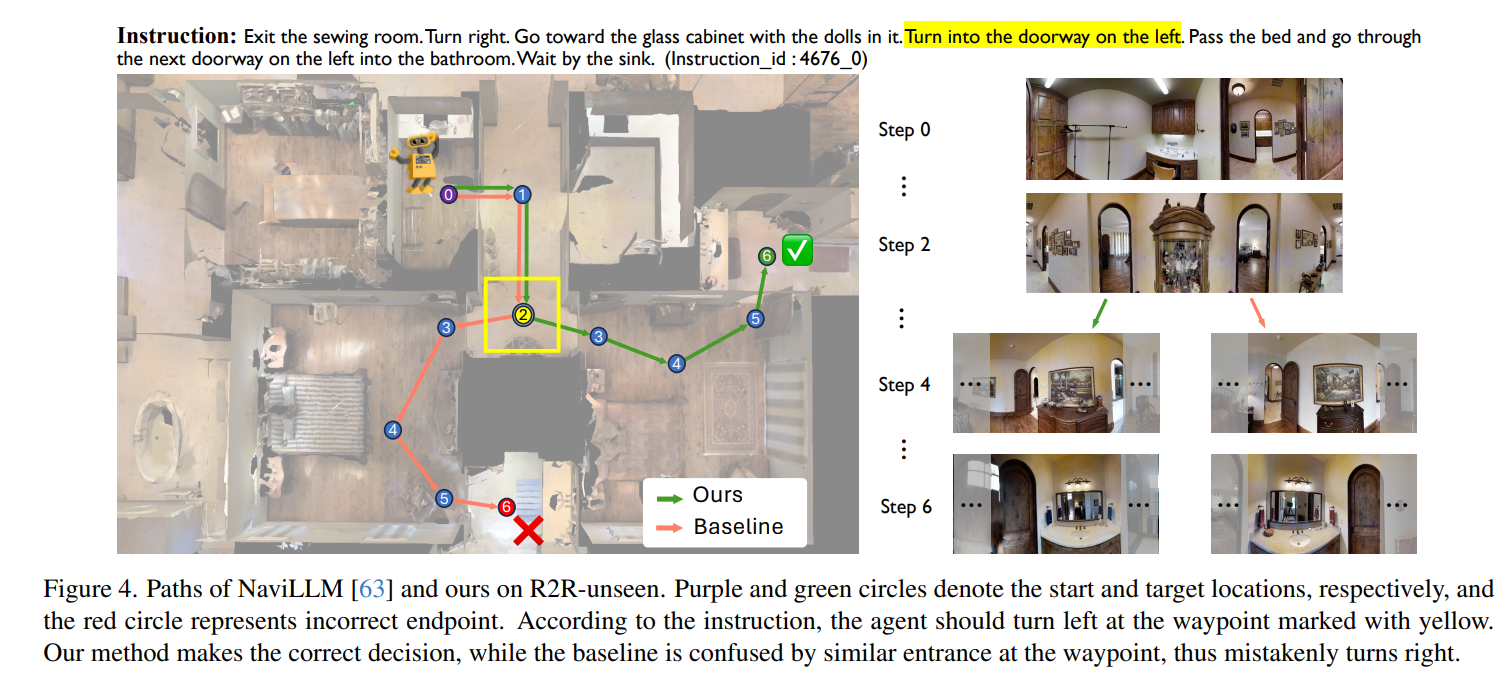

Experiment

Conclusion

In this paper, we present RoomTour3D, a novel dataset automatically curated from room tour videos for VLN tasks. By leveraging the rich, sequential nature of video data and incorporating object variety and spatial awareness, we generate 200K navigation instructions and 17K action-enriched trajectories from 1847 room tour scenes. Additionally, we produce navigable trajectories from video frames and reconstructed 3D scenes, which significantly boost performance and set new state-of-the-art results on the SOON and REVERIE benchmarks. This approach also enables the development of a trainable zero-shot navigation agent, demonstrating the effectiveness and scalability of RoomTour3D in advancing VLN research.